COCO workflow

detectron2

```python
import rasterio as rio
import geopandas as gpd
from pathlib import Path
import rasterio.plot as rioplot
import matplotlib.pyplot as plt

path_to_data = Path('workflow_examples/')
train_raster = path_to_data/'104_28_Hiidenportti_Chunk1_orto.tif'
train_shp = path_to_data/'104_28_Hiidenportti_Chunk1_orto.geojson'
test_raster = path_to_data/'104_42_Hiidenportti_Chunk5_orto.tif'
test_shp = path_to_data/'104_42_Hiidenportti_Chunk5_orto.geojson'
```

The example data is RGB UAV imagery from Hiidenportti, and the task is to detect and segment different deadwood types. The reference data are annotated as polygons, and the target column is `layer`.
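A COCO annotation file stores class labels as integer category ids listed in a `categories` section, so the unique values of the target column (`layer`) have to be mapped to ids. The snippet below is a minimal sketch of such a mapping in plain Python; `build_categories` is a hypothetical helper, and assigning ids in sorted label order starting from 1 is an assumption that may differ from how geo2ml numbers the categories.

```python
def build_categories(labels):
    """Map class labels (e.g. values of the `layer` column) to COCO-style categories.

    Hypothetical helper: ids are assigned in sorted label order, starting from 1.
    """
    unique_labels = sorted(set(labels))
    categories = [{'id': i, 'name': name} for i, name in enumerate(unique_labels, start=1)]
    name_to_id = {c['name']: c['id'] for c in categories}
    return categories, name_to_id


# Example labels; in the workflow these would come from the `layer` column of the polygons
labels = ['uprightwood', 'groundwood', 'groundwood', 'uprightwood']
categories, name_to_id = build_categories(labels)
print(categories)
# [{'id': 1, 'name': 'groundwood'}, {'id': 2, 'name': 'uprightwood'}]
print([name_to_id[label] for label in labels])
# [2, 1, 1, 2]
```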

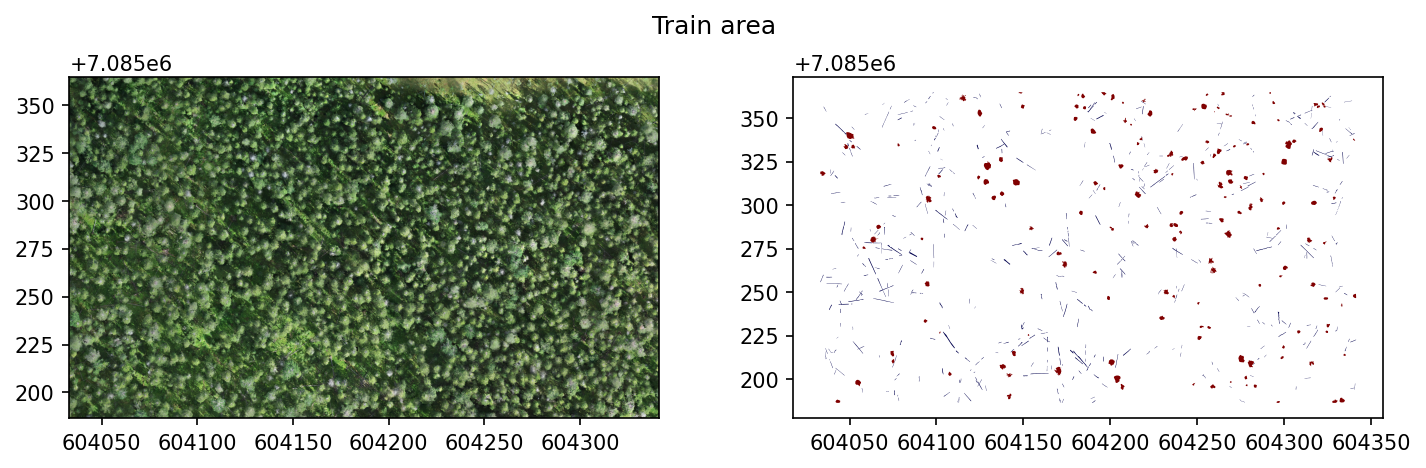

The training area looks like this.

```python
fig, axs = plt.subplots(1, 2, dpi=150, figsize=(10, 3))
with rio.open(train_raster) as src:
    rioplot.show(src, ax=axs[0])
train_gdf = gpd.read_file(train_shp)
train_gdf.plot(column='layer', ax=axs[1], cmap='seismic')
plt.suptitle('Train area')
plt.tight_layout()
plt.show()
```

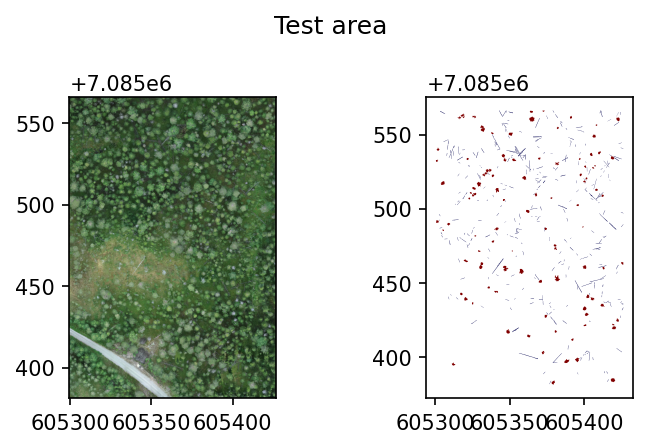

And the test area looks like this.

```python
fig, axs = plt.subplots(1, 2, dpi=150, figsize=(5, 3))
with rio.open(test_raster) as src:
    rioplot.show(src, ax=axs[0])
test_gdf = gpd.read_file(test_shp)
test_gdf.plot(column='layer', ax=axs[1], cmap='seismic')
plt.suptitle('Test area')
plt.tight_layout()
plt.show()
```

Install required dependencies

To install detectron2, follow the installation instructions in the detectron2 documentation.

Create COCO-format dataset

In this example, the data are split into 256x256 pixel tiles with no overlap. We also set `min_bbox_area` to 8 pixels so that objects that are too small are discarded.
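The tiling and filtering steps can be sketched in plain Python. The helpers `tile_windows`, `bbox_area` and `keep_annotation` are hypothetical and not part of geo2ml; the sketch assumes that edge tiles are truncated at the raster border and that the area threshold is applied inclusively to each polygon's axis-aligned bounding box in pixel units. geo2ml may handle both details differently.

```python
def tile_windows(width, height, tile_size=256):
    """Yield (col_off, row_off, tile_width, tile_height) for non-overlapping tiles.

    Edge tiles are truncated at the raster border (an assumption of this sketch).
    """
    for row_off in range(0, height, tile_size):
        for col_off in range(0, width, tile_size):
            yield (col_off, row_off,
                   min(tile_size, width - col_off),
                   min(tile_size, height - row_off))


def bbox_area(polygon):
    """Area of the axis-aligned bounding box of a polygon given as [(x, y), ...] pixel coordinates."""
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


def keep_annotation(polygon, min_bbox_area=8):
    """Hypothetical filter: keep polygons whose bounding box covers at least min_bbox_area pixels.

    Whether the threshold is inclusive (>=) is also an assumption.
    """
    return bbox_area(polygon) >= min_bbox_area


windows = list(tile_windows(600, 300))
print(len(windows))   # 6 tiles: 3 columns x 2 rows
print(windows[-1])    # (512, 256, 88, 44)

tiny = [(10, 10), (12, 10), (12, 12)]       # 2 x 2 px box -> area 4
larger = [(0, 0), (5, 0), (5, 3), (0, 3)]   # 5 x 3 px box -> area 15
print(keep_annotation(tiny), keep_annotation(larger))  # False True
```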

CLI

```bash
geo2ml_create_coco_dataset \
    example_data/workflow_examples/104_28_Hiidenportti_Chunk1_orto.tif \
    example_data/workflow_examples/104_28_Hiidenportti_Chunk1_orto.geojson layer \
    example_data/workflow_examples/coco/train example_train \
    --gridsize_x 256 --gridsize_y 256 \
    --ann_format polygon --min_bbox_area 8

geo2ml_create_coco_dataset \
    example_data/workflow_examples/104_42_Hiidenportti_Chunk5_orto.tif \
    example_data/workflow_examples/104_42_Hiidenportti_Chunk5_orto.geojson layer \
    example_data/workflow_examples/coco/test example_test \
    --gridsize_x 256 --gridsize_y 256 \
    --ann_format polygon --min_bbox_area 8
```

Python

```python
from geo2ml.scripts.data import create_coco_dataset

outpath = path_to_data/'coco'

create_coco_dataset(raster_path=train_raster, polygon_path=train_shp, target_column='layer',
                    outpath=outpath/'train', output_format='gpkg', save_grid=False, allow_partial_data=True,
                    dataset_name='example_train', gridsize_x=256, gridsize_y=256,
                    ann_format='polygon', min_bbox_area=8)

create_coco_dataset(raster_path=test_raster, polygon_path=test_shp, target_column='layer',
                    outpath=outpath/'test', output_format='gpkg', save_grid=False, allow_partial_data=True,
                    dataset_name='example_test', gridsize_x=256, gridsize_y=256,
                    ann_format='polygon', min_bbox_area=8)
```

Dataset structure

The code above creates the dataset in `path_to_data/'coco'`, which contains the folders `train` and `test`. Each of these folders contains:

- folder `images`, which contains the tiled raster patches
- folder `vectors`, which contains geojson files corresponding to each file in `images`, if the location contains any annotations
- file `coco_polygon.json`, which is the annotation file and info for the dataset
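The annotation file follows the standard COCO layout: top-level `images`, `annotations` and `categories` lists, where each annotation points to an image through `image_id` and to a class through `category_id`. Below is a minimal stdlib sketch for inspecting such a file; `summarize_coco` is a hypothetical helper, and the tiny in-memory dataset (including its made-up tile file names) only stands in for the real annotation file. The number of categories is the value `cfg.MODEL.ROI_HEADS.NUM_CLASSES` should match, and because detectron2 resolves each `file_name` relative to the `image_root` passed at registration, the sketch also lists images missing from that folder.

```python
import json
import tempfile
from collections import Counter
from pathlib import Path


def summarize_coco(json_file, image_root):
    """Return per-class annotation counts, the number of classes and missing image files."""
    with open(json_file) as f:
        coco = json.load(f)
    id_to_name = {c['id']: c['name'] for c in coco['categories']}
    counts = Counter(id_to_name[a['category_id']] for a in coco['annotations'])
    missing = [img['file_name'] for img in coco['images']
               if not (Path(image_root) / img['file_name']).exists()]
    return dict(counts), len(coco['categories']), missing


# Tiny stand-in for a generated annotation file (file names are made up)
example = {
    'images': [{'id': 1, 'file_name': 'tile_0.tif', 'width': 256, 'height': 256},
               {'id': 2, 'file_name': 'tile_1.tif', 'width': 256, 'height': 256}],
    'annotations': [
        {'id': 1, 'image_id': 1, 'category_id': 1, 'bbox': [10, 10, 20, 5], 'area': 100,
         'segmentation': [[10, 10, 30, 10, 30, 15, 10, 15]], 'iscrowd': 0},
        {'id': 2, 'image_id': 2, 'category_id': 2, 'bbox': [50, 40, 8, 8], 'area': 64,
         'segmentation': [[50, 40, 58, 40, 58, 48, 50, 48]], 'iscrowd': 0},
    ],
    'categories': [{'id': 1, 'name': 'groundwood'}, {'id': 2, 'name': 'uprightwood'}],
}

with tempfile.TemporaryDirectory() as tmp:
    image_root = Path(tmp) / 'images'
    image_root.mkdir()
    (image_root / 'tile_0.tif').touch()  # only the first tile exists on disk
    json_file = Path(tmp) / 'example.json'
    json_file.write_text(json.dumps(example))
    counts, num_classes, missing = summarize_coco(json_file, image_root)

print(counts)       # {'groundwood': 1, 'uprightwood': 1}
print(num_classes)  # 2
print(missing)      # ['tile_1.tif']
```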

Train the model

```python
import os

from detectron2 import model_zoo
from detectron2.config import get_cfg
from detectron2.data.datasets import register_coco_instances
from detectron2.engine import DefaultTrainer
from detectron2.evaluation import COCOEvaluator, DatasetEvaluators
```

First we need to register the datasets:

```python
register_coco_instances(name='example_train',  # the name that identifies a dataset for this session
                        metadata={},  # extra metadata, can be left as an empty dict
                        json_file=outpath/'train/example_train.json',  # annotation file
                        image_root=outpath/'train/images/')  # directory which contains all the images

register_coco_instances('example_test', {}, outpath/'test/example_test.json', outpath/'test/images')
```

And then modify the config file.

```python
cfg = get_cfg()
cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml"))
cfg.DATASETS.TRAIN = ("example_train",)
cfg.DATASETS.TEST = ("example_test",)
cfg.DATALOADER.NUM_WORKERS = 4
# Let training initialize from model zoo
cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml")
cfg.SOLVER.IMS_PER_BATCH = 4
# DefaultTrainer skips periodic evaluation (with a warning) unless build_evaluator() is implemented
cfg.TEST.EVAL_PERIOD = 100
cfg.OUTPUT_DIR = str(outpath/'runs')
cfg.SOLVER.MAX_ITER = 300
cfg.MODEL.ROI_HEADS.NUM_CLASSES = 2  # groundwood and uprightwood
os.makedirs(cfg.OUTPUT_DIR, exist_ok=True)
```

Next, create a trainer. Here we use `DefaultTrainer` for demonstration purposes.

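```python
trainer = DefaultTrainer(cfg)
```

First, the trainer must load weights with `resume_or_load`. With `resume=False`, it initializes from `cfg.MODEL.WEIGHTS` instead of resuming from the last checkpoint in `cfg.OUTPUT_DIR`.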

```python
trainer.resume_or_load(resume=False)
```

Then it can be trained:

```python
trainer.train()
```

For evaluation, we need to build an evaluator like the following:

```python
results = trainer.test(cfg, trainer.model,
                       evaluators=DatasetEvaluators([COCOEvaluator('example_test',
                                                                   output_dir=cfg.OUTPUT_DIR)]))
```

Results are returned as an `OrderedDict`:

```python
results
```

```
OrderedDict([('bbox',
              {'AP': 26.524945427964656,
               'AP50': 54.61366682355787,
               'AP75': 23.264070383011905,
               'APs': 25.132186571431554,
               'APm': 21.41516566172374,
               'APl': nan,
               'AP-groundwood': 23.99694519893256,
               'AP-uprightwood': 29.052945656996748}),
             ('segm',
              {'AP': 24.178996439781,
               'AP50': 50.930807248720775,
               'AP75': 19.645957078082983,
               'APs': 19.084138095771337,
               'APm': 30.276207887160094,
               'APl': nan,
               'AP-groundwood': 18.747639759369484,
               'AP-uprightwood': 29.610353120192507})])
```

Other libraries

MMDetection is another commonly used library for object detection with COCO-formatted datasets. According to the MMDetection documentation on training with customized datasets, a config like the following should work:

```python
# the new config inherits the base configs to highlight the necessary modification
_base_ = './cascade_mask_rcnn_r50_fpn_1x_coco.py'

# 1. dataset settings
dataset_type = 'CocoDataset'
# class names must match the category names in the COCO annotation file
classes = ('groundwood', 'uprightwood')
data_root = 'workflow_examples/coco/'

train_dataloader = dict(
    batch_size=2,
    num_workers=2,
    dataset=dict(
        type=dataset_type,
        metainfo=dict(classes=classes),
        data_root=data_root,
        ann_file='train/coco_polygon.json',
        data_prefix=dict(img='train/images')
    )
)

...
```
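In MMDetection 3.x, `CocoDataset` selects annotations by looking up the names in `metainfo['classes']` among the categories of the annotation file, so those names need to match the category names written from the `layer` column. A small stdlib check for this is sketched below; `check_classes` is a hypothetical helper, and the in-memory category list only stands in for the `categories` section of the real annotation file.

```python
def check_classes(config_classes, coco_categories):
    """Compare class names from an MMDetection config with the categories of a COCO file.

    Returns (config names missing from the annotation file,
             annotation categories not listed in the config).
    """
    coco_names = {c['name'] for c in coco_categories}
    missing_in_coco = [name for name in config_classes if name not in coco_names]
    unused_in_config = sorted(coco_names - set(config_classes))
    return missing_in_coco, unused_in_config


# Stand-in for the 'categories' list loaded from train/coco_polygon.json
categories = [{'id': 1, 'name': 'groundwood'}, {'id': 2, 'name': 'uprightwood'}]

print(check_classes(('groundwood', 'uprightwood'), categories))  # ([], [])
print(check_classes(('Standing', 'Fallen'), categories))
# (['Standing', 'Fallen'], ['groundwood', 'uprightwood'])
```

An empty pair means every configured class will pick up annotations; any name in the first list would silently match nothing.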